tokum.ai

TRUE INTELLIGENCE BEGINS WITH COMPREHENSION

tokum.ai reveals what nature has always known:

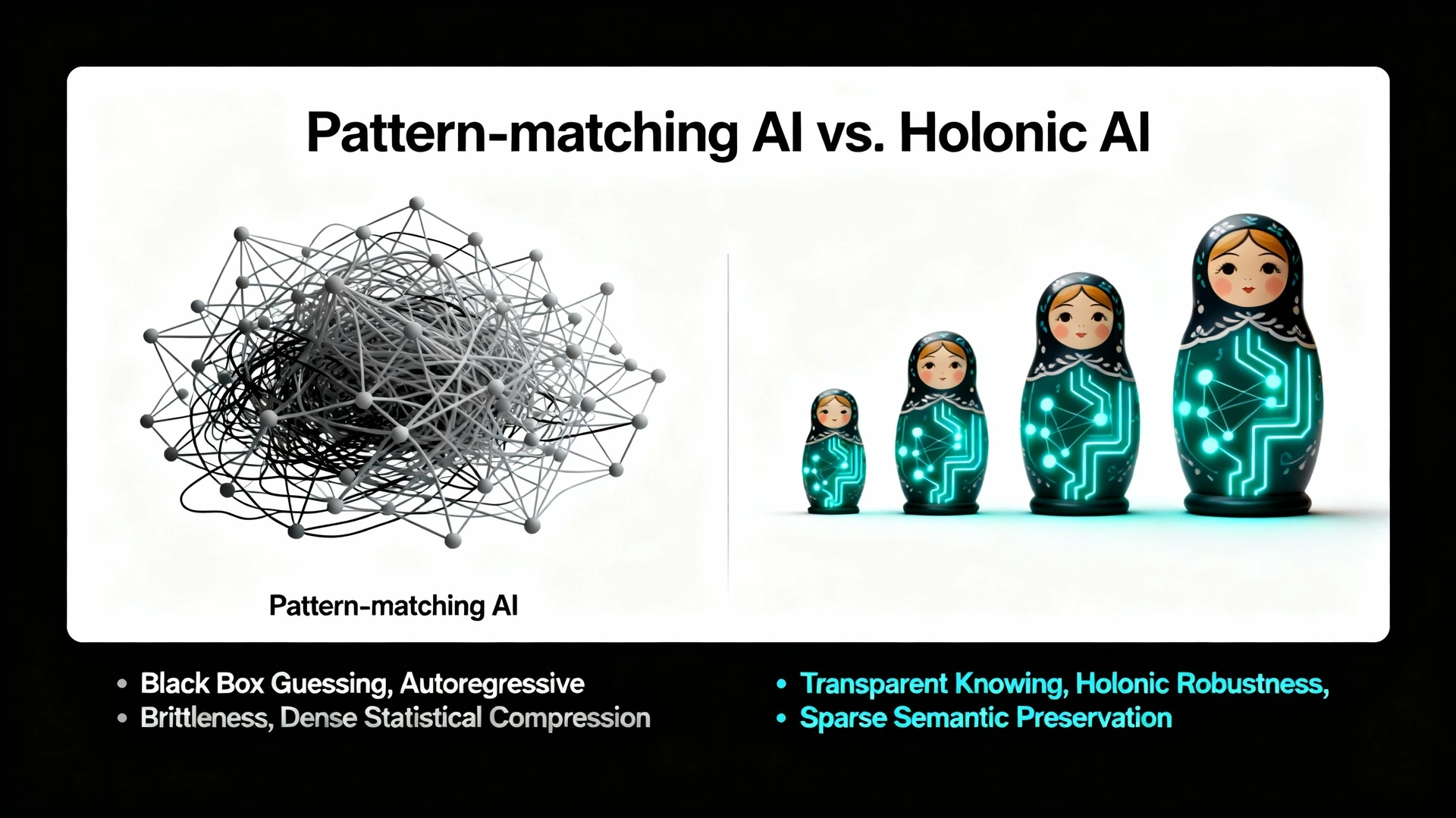

Holonic Architecture is the secret blueprint for handling infinite complexity with elegance and efficiency.

The Reality Hidden in Plain Sight

What does a living cell have in common with a forest ecosystem? What connects your immune system to a thriving civilization?

There is a universal principle, one that nature has perfected over billions of years and that evolution applies at every scale, from the microscopic to the cosmic.

Yet for decades, mainstream AI has been fundamentally blind to it.

This principle is called the Holon.

What is a Holon? The Paradox That Defines Life

In 1967, Arthur Koestler coined the term "holon" to describe something remarkable he noticed everywhere in nature: entities that are simultaneously complete wholes AND integral parts of something larger.

Consider a single cell in your body: It is a complete, autonomous system. A cell is whole. Yet it is also a tiny part of a tissue, an organ, an organ system, and ultimately you.

A cell is both self-assertive and integrative. This paradox is not a weakness—it's the source of life's extraordinary adaptability, resilience, and intelligence.

Look everywhere:

Biological Minds: Individual neurons are complete units that form thoughts only through vast, recursive networks.

Language: A word is a defined entity, yet its true meaning emerges only when it becomes part of a sentence.

Ecosystems: Each organism is autonomous, yet depends on the entire web of relationships.

Organizations: Workers are autonomous; teams are wholes that are parts of departments, which are in turn parts of companies.

Every complex, adaptive, intelligent system is built holonically.

The Error That Broke AI: Description Logic's Fatal Flaw

When computer scientists built artificial intelligence, they inherited a constraint from formal logic: description logic.

This rule demanded: An entity must be either individual or collection—but never both.

But the real world is all holons—paradoxes all the way down.

We actually solved this once before—in isolation. Visionary architectures like Smalltalk and Lisp proved decades ago that computers can think in holons. By treating code as data and objects as recursive structures, they successfully overcame the "Description Logic Limitation" on a local level.

The catch? They were Islands of Coherence. These systems solved the paradox only for the Single-User, Single-Machine environment. They lacked the protocol to scale that recursive wisdom to a connected world.

Because mainstream AI ignored these architectural truths to chase raw scale, it was forced to flatten reality into a one-dimensional sequence.

The Fundamental Crisis: Meaning Collapsed Into Noise

This creates one fundamental problem manifesting in multiple ways: Classical AI cannot distinguish signal from noise because it compresses all meaning into dense numerical matrices.

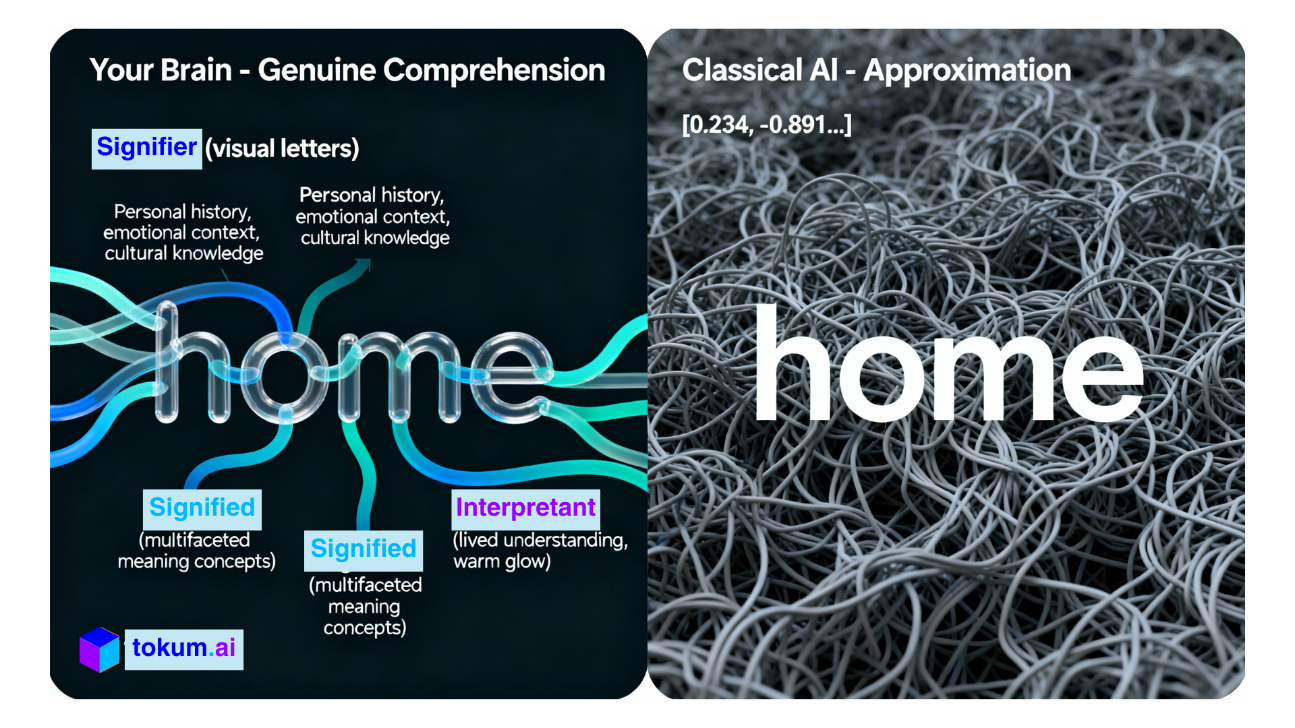

When you read the word "home," your brain performs invisible alchemy. Those four letters trigger:

Visual recognition

Phonological activation (sound)

Semantic memories (childhood, safety, belonging)

Emotional resonance (comfort or pain)

Cultural context (shelter, nation, identity)

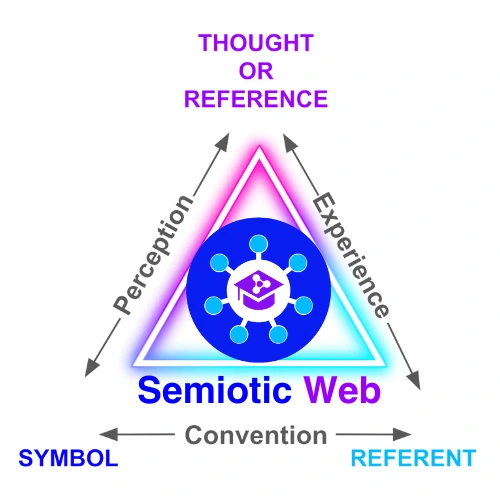

Your brain makes meaning through semiosis—the dynamic creation of understanding through a triangulation of:

The Signifier (the symbol: letters, sounds, images)

The Signified (what it refers to: the concept)

The Interpretant (your understanding, shaped by context and experience)

This process is recursive and multidirectional. The same signifier triggers radically different meanings depending on who interprets it and in what context.

Classical AI severs this connection.

By flattening this rich triangle into a flat list of numbers (vectors), the system deletes the Interpretant. It loses the "Who" and the "Why," leaving only a statistical shadow of the "What."

The system becomes legally and logically blind. It cannot distinguish:

Facts from Guesses (Hallucinations are treated with the same confidence as history)

Universal Truths from Personal Context (A definition of "Risk" for a bank is mixed with "Risk" for a board game)

Causality from Correlation (Forward reasoning is indistinguishable from backward rationalization)

Meaning from Noise (99% of the matrix is uninformative statistical static)

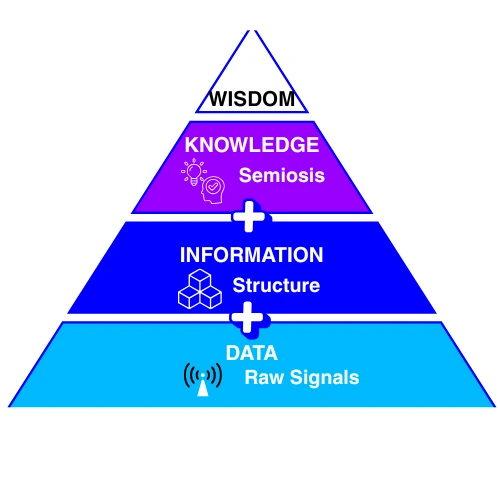

From Data to Information to Knowledge

To understand why modern AI hallucinates, we must understand the hierarchy of understanding:

Data = Raw Signals. (Pixels, characters, sensor readings). NO Context.

Information = Data + Structure. (Sentences, databases, spreadsheets). NO Meaning.

Knowledge = Information + Semiosis. (Context, provenance, and intent). TRUE Comprehension.

Mainstream AI hits a glass ceiling at Information. It is excellent at organizing data into structure (syntax), but it is structurally unable to achieve Knowledge. Why?

Because Knowledge requires the Interpretant—the specific context of who is knowing, when, and why. By compressing reality into static vectors, Classical AI strips away the Interpretant. It offers a sophisticated simulation of knowledge, but fundamentally remains a "Stochastic Parrot"—mimicking the shape of information without accessing its soul.

The Semiotic Web operates above the glass ceiling. It does not just store the "Fact." It captures the Semiosis—the living, dynamic relationship between the symbol, the concept, and the context.

Introducing the Foundation: The Building Blocks of Understanding

To rebuild AI on a holonic, semiotic foundation, tokum.ai created four interconnected components that work as one integrated system:

1. The Semiotic Web: The Architecture

The Semiotic Web is the unified architecture that brings all components together into a coherent system for machine comprehension.

It operates on a fundamental principle: Meaning is not stored—it's preserved through semiosis.

Unlike the classical web (documents connected by links) or classical AI (tokens compressed into matrices), the Semiotic Web encodes meaning explicitly, holonically, and bidirectionally, with temporal and causal dimensions preserved through Semantic Spacetime.

2. Tokums: The Atomic Units of Comprehension

A Tokum is not just a database ID or a vector weight. It is the SHA-256 fingerprint of a Concept, generated by hashing a Canonical URN (Uniform Resource Name).

The Semantic DNA

The SHA-256 hash is the cryptographic label for each tokum: its unique identifier. This label points to the tokum's complete semantic definition: what it is, what it serves, and what it connects to. Because a hash cannot be reversed, the "semantic DNA" is accessed by looking the label up in the HCNV-ColBERT matrix, which resolves it to its stored meaning.
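As a rough illustration of the idea above, the sketch below derives a Tokum from a canonical URN with Python's standard `hashlib`. The URN scheme (`urn:concept:...`) and the normalization rules (NFC, lowercase, hyphenated whitespace) are assumptions made for this example; the text does not specify how tokum.ai canonicalizes names.

```python
import hashlib
import unicodedata


def canonical_urn(namespace: str, label: str) -> str:
    """Build a canonical URN (hypothetical scheme: urn:<namespace>:<slug>)."""
    # Assumed normalization: NFC, trimmed, lowercased, whitespace runs -> '-'.
    normalized = unicodedata.normalize("NFC", label).strip().lower()
    slug = "-".join(normalized.split())
    return f"urn:{namespace}:{slug}"


def tokum(urn: str) -> str:
    """Return the SHA-256 hex digest of the URN's UTF-8 bytes."""
    return hashlib.sha256(urn.encode("utf-8")).hexdigest()


# Two spellings of the same concept canonicalize to the same URN,
# so they receive the same 64-character identifier.
paris = tokum(canonical_urn("concept", "Paris"))
same = tokum(canonical_urn("concept", "  paris "))
temperature = tokum(canonical_urn("concept", "Temperature"))
```

The identifier is only as stable as the canonicalization step: any change to the normalization rules changes every hash derived from them.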

Radical Holonistic Versatility

The power of a Tokum lies in its ability to shapeshift while maintaining its cryptographic identity. Because it is a universal hash, the exact same Tokum can function simultaneously as:

A Node in a Knowledge Graph

An Edge defining a relationship

A Row or Column in the HCNV Matrix

An Intersection storing a weight

A Primary or Foreign Key in a Relational Database

This is how we overcome the "Description Logic" limitation: the Tokum is never just a part or a whole—it is fluidly both, depending on how you view the system.
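To make the roles listed above concrete, here is a minimal sketch in which one hash value serves as a graph node, an edge label, a matrix key, and a relational key. The plain dictionaries and the example URNs are illustrative assumptions, not the actual storage layer.

```python
import hashlib


def tokum(urn: str) -> str:
    """SHA-256 hex digest of a URN string (URNs here are made up)."""
    return hashlib.sha256(urn.encode("utf-8")).hexdigest()


paris = tokum("urn:concept:paris")
france = tokum("urn:concept:france")
capital_of = tokum("urn:relation:capital-of")

# Knowledge graph: tokums as nodes, and a tokum as the edge label.
graph = {paris: [(capital_of, france)]}

# Sparse matrix: tokums as row and column keys, a weight at the intersection.
matrix = {paris: {capital_of: 1.0}}

# Relational table: one tokum as primary key, another as foreign key.
cities = {paris: {"country_id": france}}

# The same identifier plays a different role in each structure.
edge_label, target = graph[paris][0]
is_column_too = edge_label in matrix[paris]
```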

Canonical Comprehension Units

Meaning is constructed through four specific types of Tokums:

| Unit | Name | Function |
|---|---|---|
| CCI | Canonical Component Identifier | The Atom. The unique hash of a discrete entity (e.g., "Paris", "Temperature"). |
| SST | Semantic Spacetime Type | The Context. The metadata defining when and where this truth exists. Crucially, this is embedded into every subsequent structure. |
| CPI | Canonical Pairwise Identifier | The Bond. We do not just link two things; we fuse them. We generate two distinct pairs for every relationship: (1) Subject-Predicate (CPI-SP) and (2) Predicate-Object (CPI-PO). Both pairs encapsulate the exact same SST, ensuring context is never lost. |
| CTI | Canonical Triple Identifier | The Molecule. The complete, unbreakable semantic statement (Subject-Predicate-Object) derived from the union of the CPIs and the SST. |

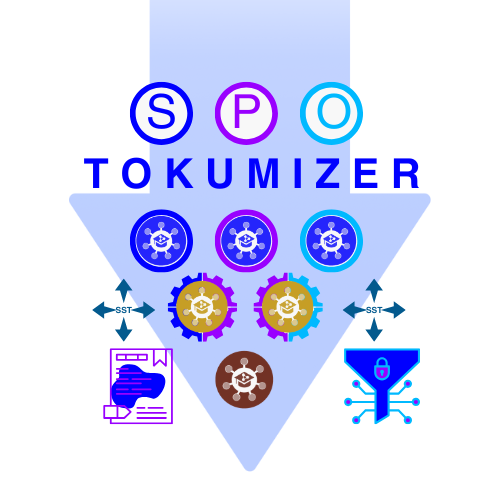

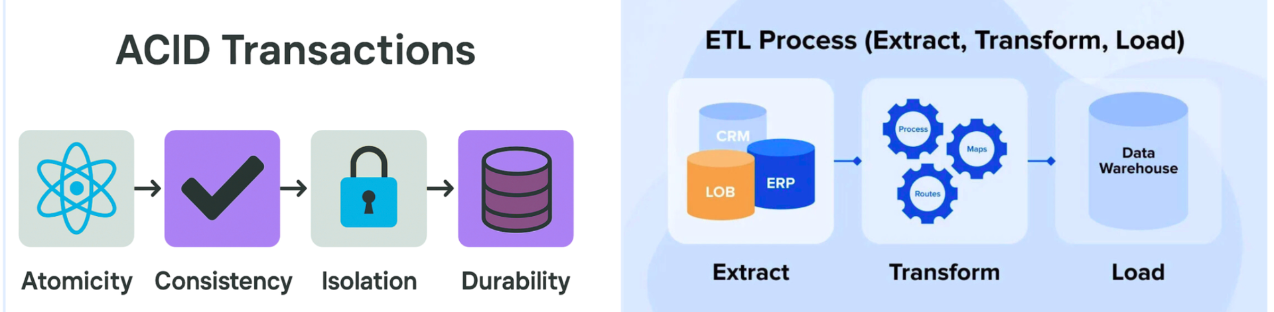

3. The Tokumizer: The ACID ETL for Semantic Knowledge

The Process Flow

Step 1: Ingestion & Provenance (The Anchor)

The system ingests a single RDF Triple. Before processing, it isolates the Source IRI and locks it into a Reverse Index.

Result: Full traceability. Every future Tokum can be reverse-engineered to find exactly where it came from.
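A reverse index of this kind can be sketched as a mapping from each Tokum back to the source IRIs that asserted it. The class and method names below are hypothetical, and the IRI uses the reserved `example.org` domain.

```python
import hashlib
from collections import defaultdict


def tokum(urn: str) -> str:
    """SHA-256 hex digest of a URN string."""
    return hashlib.sha256(urn.encode("utf-8")).hexdigest()


class ReverseIndex:
    """Maps a tokum back to every source IRI that contributed it."""

    def __init__(self) -> None:
        self._sources = defaultdict(set)

    def anchor(self, tokum_id: str, source_iri: str) -> None:
        # Record provenance before any further processing happens.
        self._sources[tokum_id].add(source_iri)

    def sources_of(self, tokum_id: str) -> set:
        # Return a copy so callers cannot mutate the index.
        return set(self._sources.get(tokum_id, set()))


index = ReverseIndex()
paris = tokum("urn:concept:paris")
index.anchor(paris, "https://example.org/dataset/cities")
```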

Step 2: Canonicalization & Hashing (The Identity)

The system assigns the Semantic Spacetime Type (SST) to define the context. It then converts the Subject, Predicate, Object, and SST into Canonical URNs. These URNs are hashed (SHA-256) to create the fundamental CCIs.

Step 3: The Holonic Fusion (The Construction)

Here is where the "DNA" is built. The system does not just concatenate strings; it fuses identity, context, and security.

Salt Injection: A system-wide HMAC salt (a secret key) is mixed into each hash, so the resulting identifiers cannot be recomputed without it.

The CPI Split: The system generates the two vital bonds:

CPI-SP: (Subject CCI + Predicate CCI + SST + Salt)

CPI-PO: (Predicate CCI + Object CCI + SST + Salt)

Note how the SST is present in both. The context is ubiquitous.
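The CPI split can be sketched with Python's standard `hmac` module. This is an illustration under stated assumptions: the salt is treated as the HMAC key, the inputs are joined with `|`, and the URNs are invented for the example. None of these details are specified in the text.

```python
import hashlib
import hmac


def cci(urn: str) -> str:
    """Canonical Component Identifier: SHA-256 of a canonical URN."""
    return hashlib.sha256(urn.encode("utf-8")).hexdigest()


def cpi(first: str, second: str, sst: str, salt: bytes) -> str:
    """Keyed hash over two CCIs plus the SST tokum (assumed layout)."""
    message = "|".join((first, second, sst)).encode("utf-8")
    return hmac.new(salt, message, hashlib.sha256).hexdigest()


SALT = b"demo-system-salt"  # placeholder; a real deployment would keep this secret

subject = cci("urn:concept:paris")
predicate = cci("urn:relation:capital-of")
obj = cci("urn:concept:france")
sst = cci("urn:sst:expresses-property")

# Both bonds carry the same SST, so the context travels with each pair.
cpi_sp = cpi(subject, predicate, sst, SALT)
cpi_po = cpi(predicate, obj, sst, SALT)
```

Changing either the SST or the salt yields a different CPI for the same pair of CCIs, which is what ties context and access control to the identifier.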

Step 4: The Recursive Load (The Output)

The CPIs and SST combine to form the final CTI. The Tokumizer outputs Two Fully Tokum-Based RDF-Star Triples:

The Definition: << CTI >> hasSST <SST Tokum>

The Origin: << CTI >> hasSource <Source Tokum>

These automatically generate (or update) entries in the HCNV-ColBERT Matrix and the Hypergraph simultaneously.
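The final step might look like the sketch below, which combines two CPIs and an SST into a CTI and emits the two statements as plain tuples. The way the CTI is derived (a SHA-256 over the `|`-joined inputs) is an assumption, and the `<< ... >>` strings only mirror the notation used above; they are not parsed by an RDF library.

```python
import hashlib


def sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def cti(cpi_sp: str, cpi_po: str, sst: str) -> str:
    """Canonical Triple Identifier from both CPIs and the SST (assumed layout)."""
    return sha256_hex("|".join((cpi_sp, cpi_po, sst)))


def output_triples(cti_id: str, sst_id: str, source_id: str) -> list:
    """Return the Definition and Origin statements as (subject, predicate, object)."""
    quoted = f"<< {cti_id} >>"
    return [
        (quoted, "hasSST", sst_id),        # The Definition
        (quoted, "hasSource", source_id),  # The Origin
    ]


# Stand-in inputs; in the pipeline these come from the earlier steps.
demo_cpi_sp = sha256_hex("demo-cpi-sp")
demo_cpi_po = sha256_hex("demo-cpi-po")
demo_sst = sha256_hex("urn:sst:expresses-property")
demo_source = sha256_hex("https://example.org/dataset/cities")

triples = output_triples(cti(demo_cpi_sp, demo_cpi_po, demo_sst), demo_sst, demo_source)
```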

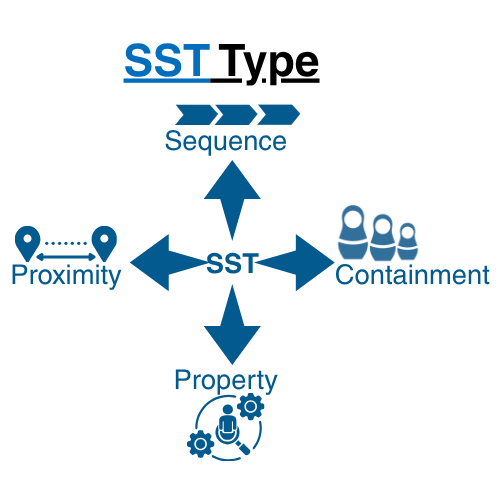

4. Semantic Spacetime: The Four Relationship Types

The Semiotic Web preserves more than facts—it preserves how those facts relate to each other and when they matter.

Every tokum relationship is expressed through one of four fundamental SST (Semantic Spacetime) types:

NEAR (Semantic Proximity): Concepts that are similar, contextually adjacent, or semantically related

LEADS TO (Causality): Facts that cause other facts; temporal and causal sequences

CONTAINS (Composition): Holonic nesting; parts within wholes, members within systems

EXPRESSES PROPERTY (Attribution): Qualities and characteristics that define or describe entities

Each SST type is itself a tokum. The salted HMAC enables clustering these four SST tokum types into distinct regions of the HCNV-ColBERT matrix. This clustering facilitates navigation and promise-making between Agents of Comprehension.

The source metadata for these relationships—who asserted them, when, from what origin—is not stored in the tokum itself. Instead, it's accessed through the reverse index linked to the CTI (Canonical Triple Identifier), ensuring both semantic clarity and access control through the HMAC salt.

This is what makes meaning actionable rather than merely stored.
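One way to picture the four SST types is as an enumeration whose members each have their own Tokum, with relationships grouped by that Tokum. The URN scheme and the toy relationships below are assumptions for illustration; the grouping dictionary merely stands in for the "distinct regions" of the matrix.

```python
import hashlib
from collections import defaultdict
from enum import Enum


class SST(Enum):
    NEAR = "near"
    LEADS_TO = "leads-to"
    CONTAINS = "contains"
    EXPRESSES_PROPERTY = "expresses-property"

    @property
    def tokum(self) -> str:
        # Each SST type is itself a tokum (hypothetical URN scheme).
        return hashlib.sha256(f"urn:sst:{self.value}".encode("utf-8")).hexdigest()


relationships = [
    ("smoke", SST.LEADS_TO, "alarm"),
    ("forest", SST.CONTAINS, "tree"),
    ("tree", SST.EXPRESSES_PROPERTY, "height"),
    ("tree", SST.NEAR, "shrub"),
]

# Group relationships by the tokum of their SST type.
regions = defaultdict(list)
for subject, sst_type, obj in relationships:
    regions[sst_type.tokum].append((subject, obj))
```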

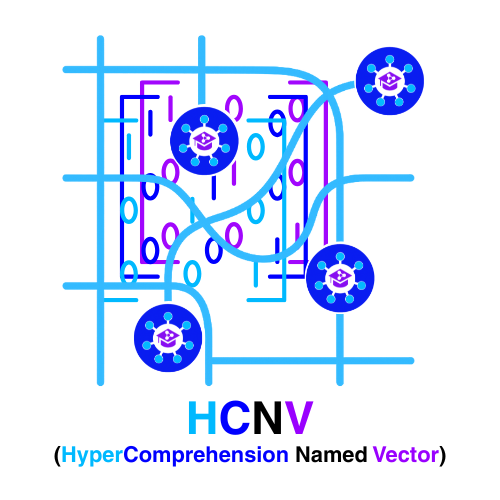

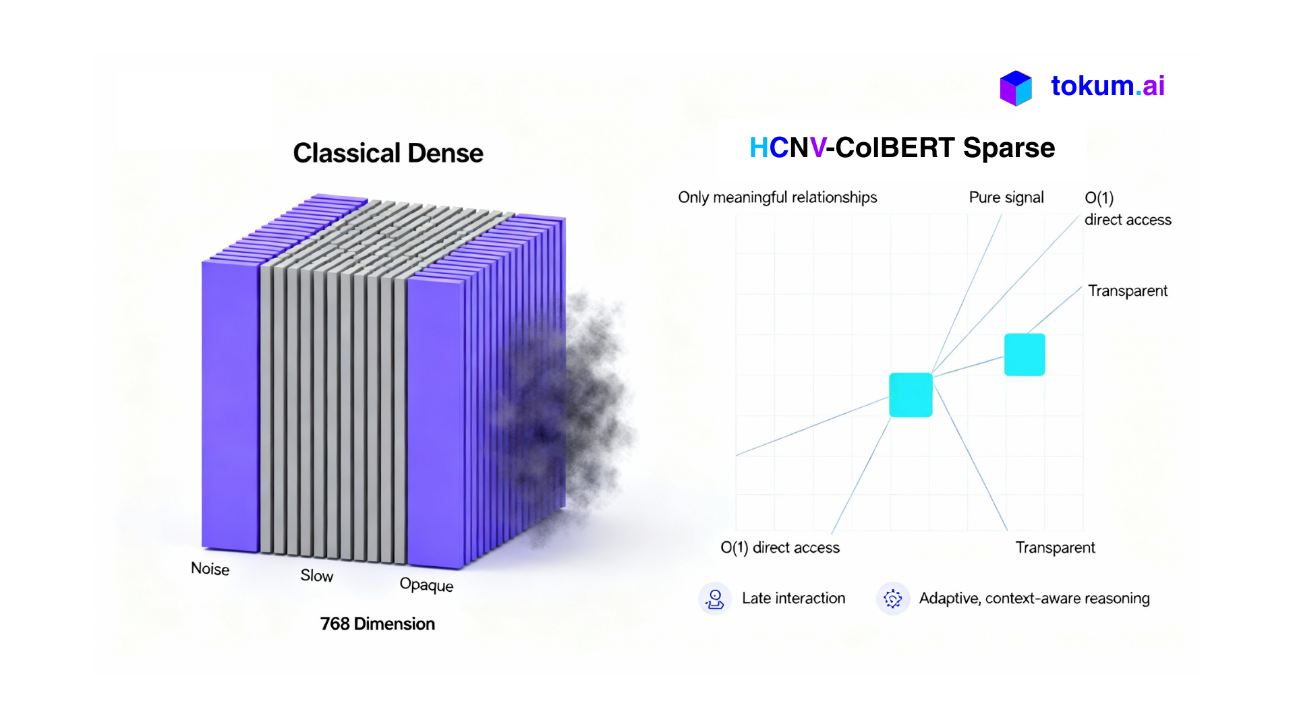

5. HCNV-ColBERT: The Holonic Semantic Engine

Classical AI operates on Dense Embeddings: billions of abstract weights compressed into a black box. If you add new knowledge, the entire mathematical balance shifts—often forcing a complete, expensive retrain.

HCNV (HyperComprehension Named Vectors) replaces this abstract math with a precise, structural approach.

It is a multi-vector system that enables late interaction—meaning relationships between concepts are computed at retrieval time, not stored densely.
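For readers unfamiliar with late interaction, the sketch below shows the scoring rule used by ColBERT-style retrievers: each query vector is matched against its best document vector at query time, and the maxima are summed. The toy two-dimensional vectors are invented, and how HCNV adapts this rule to Tokum-keyed vectors is not specified here.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def maxsim(query_vecs, doc_vecs):
    """Late-interaction score: sum over query vectors of the best document match."""
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)


query = [[1.0, 0.0], [0.0, 1.0]]
docs = {
    "doc_a": [[1.0, 0.0], [0.0, 1.0]],  # matches both query vectors
    "doc_b": [[1.0, 0.0], [1.0, 0.0]],  # matches only the first
}

# Scores are computed at retrieval time; nothing about the pairing is precomputed.
ranked = sorted(docs, key=lambda name: maxsim(query, docs[name]), reverse=True)
```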

The Structural Breakthrough: A Matrix of Holons

This late interaction is powered by a fundamentally new data structure. The Sparse Matrix is not just numbers; it is Holonistic.

The Rows are Tokums (Concepts).

The Columns are Tokums (Attributes).

The Intersections are Tokums (Explicit Relationships).

Because every parameter—row, column, and intersection—is an explicit, hashed Tokum rather than an arbitrary weight, the system gains Infinite Additive Capability.

Knowledge Without Recalculation

In Classical AI, adding a fact requires re-balancing the whole network. In the Semiotic Web, you simply insert the new Tokum.

Zero Displacement: You can insert a new concept (Row) or attribute (Column) without the need to recalculate its position against all others.

Zero Retraining: The matrix expands natively, preserving the stability of existing knowledge.

Instant Comprehension: The moment a Tokum is added, it is universally addressable and ready for late interaction.

The result is a sparse semantic matrix (99.75% empty) where every non-zero entry is a Traceable Truth, not a statistical guess.
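The additive behaviour described above can be illustrated with a dictionary-backed sparse matrix: inserting a new row or column touches only the new cell. The class below is a hypothetical sketch, and short readable strings stand in for Tokum hashes.

```python
class SparseTokumMatrix:
    """Sparse matrix keyed by (row, column) identifiers; empty cells are not stored."""

    def __init__(self):
        self.cells = {}
        self.rows = set()
        self.cols = set()

    def insert(self, row, col, value):
        # Only the new cell is written; no existing entry is recomputed.
        self.rows.add(row)
        self.cols.add(col)
        self.cells[(row, col)] = value

    def get(self, row, col, default=0.0):
        return self.cells.get((row, col), default)

    def sparsity(self):
        """Fraction of the row-by-column grid that holds no entry."""
        total = len(self.rows) * len(self.cols)
        return 1.0 - len(self.cells) / total if total else 1.0


m = SparseTokumMatrix()
m.insert("paris", "capital-of", 1.0)
m.insert("berlin", "capital-of", 1.0)
m.insert("paris", "population", 2.1)

before = dict(m.cells)
m.insert("tokyo", "timezone", 9.0)  # new row and new column
unchanged = all(m.cells[key] == value for key, value in before.items())
```

As the grid grows faster than the number of stored cells, the measured sparsity rises, which is the property the figure quoted above refers to.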

The result:

20,000× faster reasoning

400× lower energy consumption

Complete auditability (every connection is explicit and traceable to SHA-256)

Lossless meaning (nothing compressed away)

Adaptive comprehension (relationships computed contextually at query time)

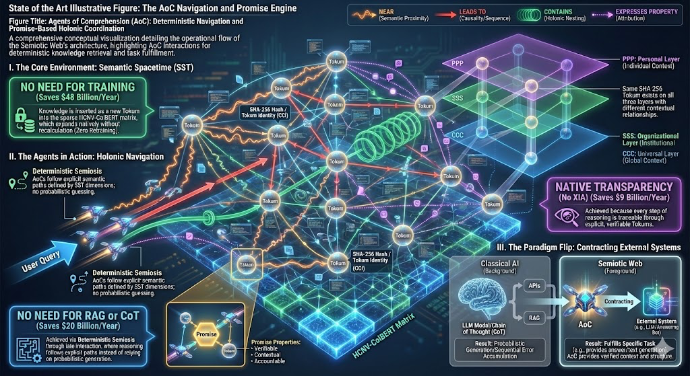

6. Agents of Comprehension: Navigation and Promise-Making

Agents of Comprehension (AoCs) are the fundamental elements for implementing Holonicracy. They represent a different class of AI agent, one built for understanding by design. Unlike systems that statistically generate text, AoCs are engineered to navigate semantic spacetime. They embody the spirit of Holonic Architecture, the blueprint nature uses to handle infinite complexity with elegance and efficiency.

AoCs achieve coherence and coordination by integrating with other systems through the coordinate system of Semantic Spacetime (SST) and the operating principles of Promise Theory.

Navigation and Promise Theory: Implementing Holonicracy

In the Semiotic Web, AoCs are autonomous and connected entities. They traverse the four SST dimensions (NEAR, LEADS TO, CONTAINS, EXPRESSES PROPERTY) to understand the explicit relationships between tokums. These agents do not predict the next word; rather, they follow explicit semantic paths through the hypergraph.
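Following explicit semantic paths can be sketched as a breadth-first search that only crosses edges of permitted SST types. The toy graph and the function name are assumptions for this example, and readable labels stand in for Tokum hashes.

```python
from collections import deque

# Adjacency list: node -> list of (SST type, target node).
GRAPH = {
    "organism": [("CONTAINS", "organ")],
    "organ": [("CONTAINS", "tissue"), ("EXPRESSES PROPERTY", "function")],
    "tissue": [("CONTAINS", "cell")],
    "cell": [("NEAR", "neuron")],
}


def find_path(graph, start, goal, allowed):
    """Return the list of (source, SST type, target) hops, or None if unreachable."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for sst_type, target in graph.get(node, []):
            if sst_type in allowed and target not in seen:
                seen.add(target)
                queue.append((target, path + [(node, sst_type, target)]))
    return None


nesting = find_path(GRAPH, "organism", "cell", {"CONTAINS"})
blocked = find_path(GRAPH, "organism", "function", {"CONTAINS"})
```

The returned path is itself the explanation: every hop names the relationship type that justified it.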

The mechanism enabling autonomous cooperation is Promise Theory, developed by Mark Burgess. When an agent needs to answer a question or perform a task, it navigates the SST dimensions to find tokums that are semantically compatible with its goal. The agent then makes a promise to connect these tokums in a specific way, asserting a new relationship or confirming an existing one.

Because every tokum is cryptographically signed and every SST relationship is traceable, these promises are:

- Verifiable - The path through semantic spacetime can be audited.

- Contextual - Agents navigating different layers may make different, compatible promises.

- Accountable - Promises that prove false affect the agent's credibility.

This framework ensures that properties and order emerge from relationship and position within semantic spacetime, achieving decentralized coordination without centralized control.
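A minimal sketch of the three promise properties follows, assuming a promise is backed by a path of hops that can be checked against known relationships, and that credibility is the share of promises kept. These data structures and the scoring rule are illustrative assumptions, not part of Promise Theory itself or of the tokum.ai design.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    name: str
    kept: int = 0
    broken: int = 0

    @property
    def credibility(self) -> float:
        total = self.kept + self.broken
        # Assumption: an agent with no history starts fully trusted.
        return self.kept / total if total else 1.0


@dataclass(frozen=True)
class Promise:
    promiser: str
    path: tuple  # (subject, SST type, object) hops supporting the promise


def audit(promise: Promise, known_edges: set) -> bool:
    """Verifiable: every hop must exist among the known relationships."""
    return bool(promise.path) and all(hop in known_edges for hop in promise.path)


def settle(agent: Agent, promise: Promise, known_edges: set) -> bool:
    """Accountable: the audit outcome updates the agent's record."""
    ok = audit(promise, known_edges)
    if ok:
        agent.kept += 1
    else:
        agent.broken += 1
    return ok


edges = {("smoke", "LEADS TO", "alarm")}
agent = Agent("aoc-1")
good = Promise("aoc-1", (("smoke", "LEADS TO", "alarm"),))
bad = Promise("aoc-1", (("alarm", "LEADS TO", "smoke"),))
results = [settle(agent, good, edges), settle(agent, bad, edges)]
```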

AoCs in Action: Flipping the AI Paradigm

Current agentic AI typically relies on LLMs that use Chain of Thought (CoT) prompting to call APIs and drive RAG pipelines. The Semiotic Web inverts this central role of the LLM.

In our architecture, the LLM does not command the AoCs. Instead, AoCs, being autonomous and connected, contract with external systems (including AI answering bots or any software) to fulfill specific tasks, such as providing the answer to a query.

This deterministic, navigation-based approach allows the Semiotic Web to replace costly and inefficient processes associated with classical AI models:

| Classical AI Process | Cost Savings (per year) | Semiotic Web / AoC Explanation |
|---|---|---|
| Training | $48 billion | AoCs operate within the HCNV-ColBERT sparse matrix. Knowledge is gained without recalculation because you simply insert the new Tokum. The matrix expands natively, preserving the stability of existing knowledge and eliminating the need for expensive retraining. |
| RAG or CoT | $20 billion | AoCs achieve deterministic semiosis through HCNV-ColBERT late interaction, reasoning by following explicit paths rather than relying on probabilistic generation or sequential error accumulation. |
| XAI (Explainable AI) | $9 billion | The system achieves native transparency. Every step of reasoning is traceable through explicit tokums with verifiable provenance. AoCs therefore provide inherent auditability, bypassing the need for post-hoc explanation layers. |

Since AoCs have near O(1) deterministic access to any content, parameter, or element of context, and can autonomously navigate the map of meaning, proprietary and centralized dense information can be bypassed. This allows traditional systems to concentrate on what they excel at, such as text generation and creativity.

The Theoretical Foundations of Comprehension

The power of AoCs is rooted in fulfilling the requirements identified by visionary thinkers, including the nine theoretical giants.

- Honoring Arthur Koestler: Every tokum AoCs navigate is a holon, simultaneously possessing a cryptographic identity (the Self-Assertive Tendency) while nesting within larger structures (the Integrative Tendency). This ensures comprehension works through the hierarchical nesting of meaning, not flat collections of facts.

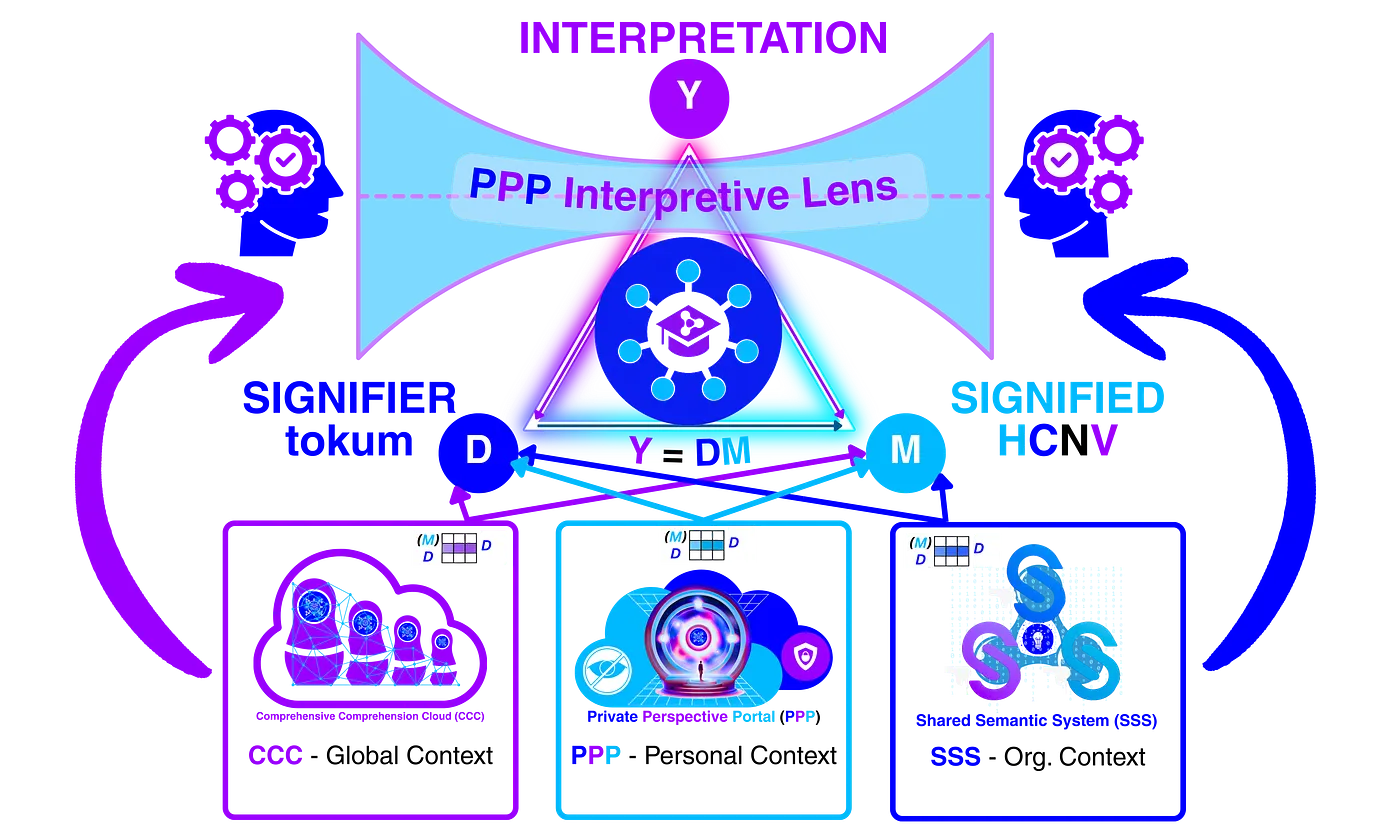

- Honoring Charles Sanders Peirce: AoCs ensure that meaning is preserved by explicitly encoding the Interpretant (the observer's context). This is achieved through the three-layer architecture—the Universal Layer (CCC), the Organizational Layer (SSS), and the Personal Layer (PPP)—which preserves the same SHA-256 Tokum with different meanings based on the interpretative context.

- Honoring Mark Burgess: AoCs directly implement Burgess’s theoretical physics. They navigate Semantic Spacetime as a coordinate system for meaning (Proximity, Sequence, Containment, Property) and coordinate through Promise Theory, creating systems of reliable, voluntary cooperation.

By integrating these foundational principles, AoCs enable adaptive comprehension, where relationships are computed contextually at query time. The system does not guess, and neither do its agents.

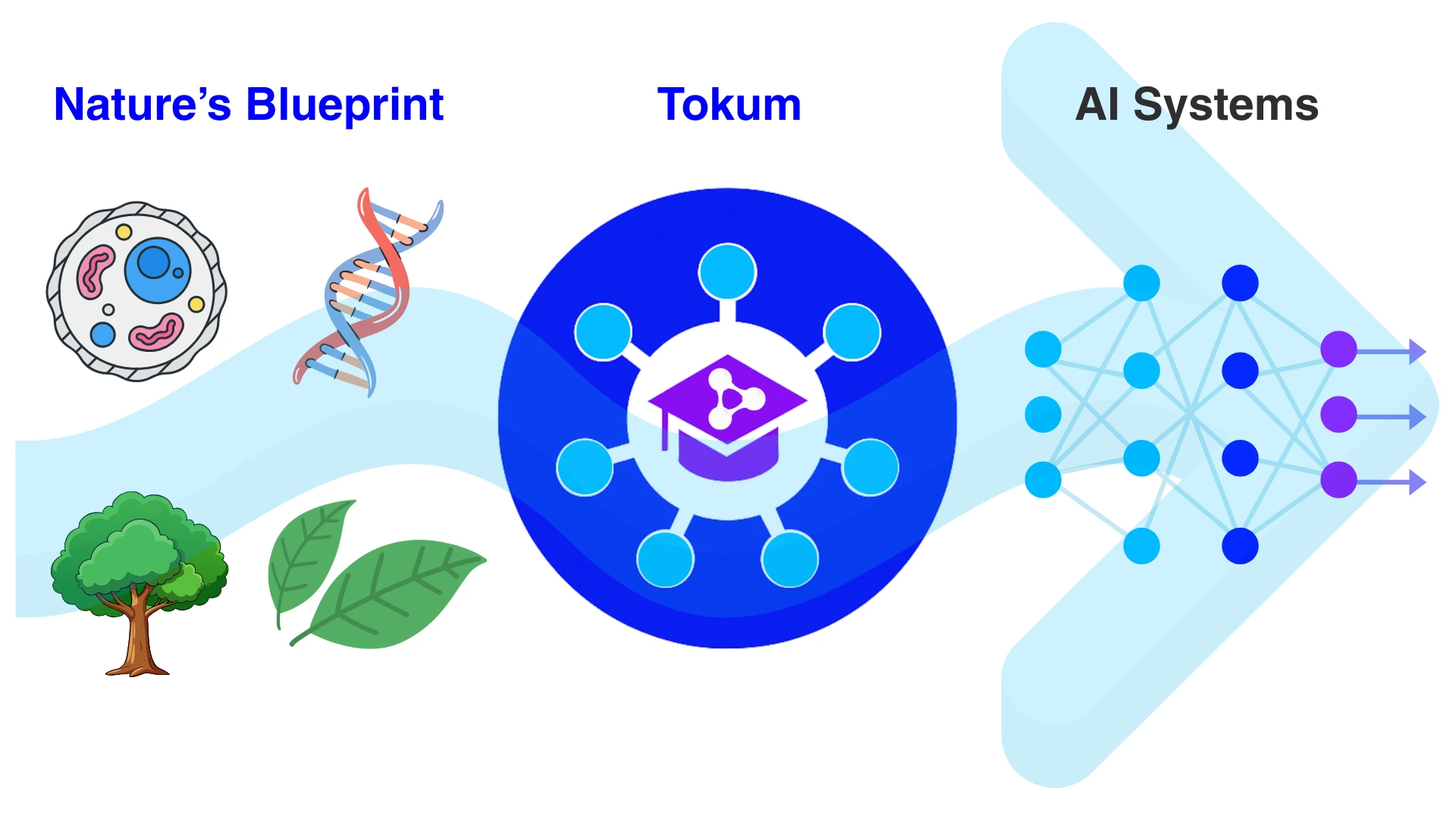

All the complex navigation and deterministic reasoning illustrated above can be simply summarized: the Tokum is the atomic unit of comprehension, acting as the cryptographic bridge that translates Nature's Holonic Blueprint into the foundational structure required to build genuinely comprehending AI systems.

Watch the Video:

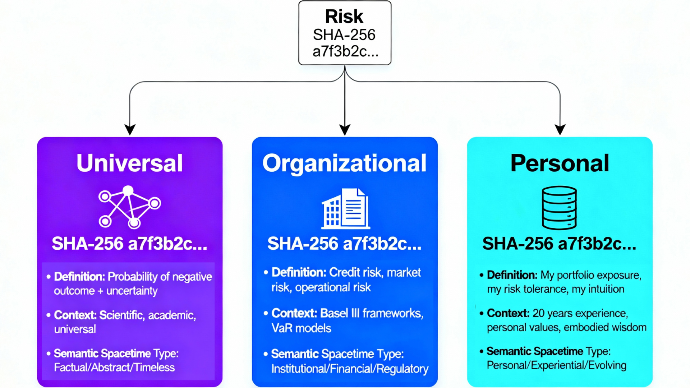

Introducing the Layers: Three Interpretative Environments

Here's where it becomes revolutionary. The same Tokum—with a single, permanent SHA-256 identity—can have fundamentally different meanings depending on which knowledge environment you're examining it in.

Imagine the word "risk." It means different things across contexts:

A universal definition (academic understanding)

An organizational interpretation (banking regulations)

A personal perspective (individual experience)

The Semiotic Web preserves all three meanings simultaneously through three distinct architectural layers:

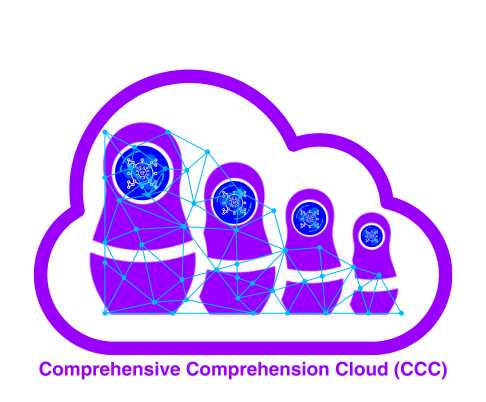

The Universal Layer: Comprehensive Comprehension Cloud

The first environment is global, verified understanding:

Universal definitions, facts, and principles

Knowledge verified against reality

Accessible to all systems globally

Common ground for reasoning

Think: Wikipedia of verified meaning—structured as Tokums with full provenance.

We call this the Comprehensive Comprehension Cloud (CCC).

The Organizational Layer: Shared Semantic System

The second environment is domain-specific, institutional context—how your organization, community, or industry interprets universal knowledge.

Domain terminology and frameworks

Organization-specific context and priorities

Collective expertise and institutional knowledge

Specialized understanding of universal concepts

Think: Your company's internal knowledge base—semantically connected to universal understanding.

We call this the Shared Semantic System (SSS).

The Personal Layer: Private Perspective Portal

The third environment is individual understanding—your persistent, private knowledge system that evolves with you.

Your personal learning and memories

Your unique perspective on shared knowledge

Your interpretative lens—shaped by your experience

Evolves as you learn and grow

Think: Your personal digital twin with persistent memory—cryptographically yours.

We call this the Private Perspective Portal (PPP).

The Revolutionary Feature: Same SHA-256 Tokum, Multiple Interpretations

The same Tokum with the same SHA-256 hash exists in all three layers (Universal (CCC), Organizational (SSS), and Personal (PPP)) simultaneously, but with different meanings based on interpretative context.

The interpretative lens: By comparing how the same SHA-256 Tokum is represented across all three layers—Universal, Organizational, and Personal—agents can understand not just what something means, but how it means differently across contexts.
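The layered lookup can be pictured as three dictionaries keyed by the same hash. In this sketch the definitions of "risk" are invented examples, and the resolution order (most specific layer first) is an assumption rather than documented behaviour.

```python
import hashlib


def tokum(urn: str) -> str:
    return hashlib.sha256(urn.encode("utf-8")).hexdigest()


RISK = tokum("urn:concept:risk")

# One identifier, up to three interpretations (example texts are made up).
LAYERS = {
    "CCC": {RISK: "Possibility of loss or harm."},
    "SSS": {RISK: "Exposure measured against regulatory capital requirements."},
    "PPP": {},
}


def interpret(tokum_id, layers, order=("PPP", "SSS", "CCC")):
    """Return (layer, meaning) from the first layer that defines the tokum."""
    for layer in order:
        if tokum_id in layers[layer]:
            return layer, layers[layer][tokum_id]
    return None


def compare(tokum_id, layers):
    """Show how the same tokum reads in every layer (None where undefined)."""
    return {name: entries.get(tokum_id) for name, entries in layers.items()}


resolved = interpret(RISK, LAYERS)
side_by_side = compare(RISK, LAYERS)
```

`compare` corresponds to the interpretative lens described above: the hash stays fixed while the attached meaning varies by layer.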

How Knowledge Flows: Bidirectional Semiosis

Knowledge doesn't flow one way—it flows multidirectionally across all three layers:

Upward (Integration & Abstraction):

Personal insight (PPP) → shared with team (SSS) → potentially contributes to universal understanding (CCC)

Downward (Specialization & Application):

Universal principle (CCC) → specialized in your domain (SSS) → applied to your specific situation (PPP)

Sideways (Cross-Pollination):

One person's insight (PPP) fertilizes another's understanding. Teams share domain knowledge directly.

This is reciprocal semiosis at scale—how human understanding actually works.

How the Semiotic Web Solves Every Challenge:

| Challenge | Classical AI Problem | Semiotic Web Solution |
|---|---|---|
| Opacity | Black-box weights with no interpretability | Every Tokum (SHA-256) carries explicit semantic meaning and is fully auditable and traceable |
| Approximation | Statistical guessing from billions of parameters | Deterministic semiosis through HCNV-ColBERT late interaction: reasoning, not approximation |
| Extractivism | Centralized tech giants control all value | Decentralized architecture with direct creator compensation (described below) |
| Lack of grounding | Language models float in abstract space | Multimodal Tokums ground concepts in verifiable reality: images, sound, sensor data |
| Autoregressive brittleness | Sequential error accumulation | Parallel reasoning through HCNV-ColBERT, with no sequential collapse |
| Missing memory/planning | Context window resets; no persistence | Personal layer maintains persistent, evolving knowledge; Semantic Spacetime preserves temporal context |

A Once-in-a-Decade Architectural Breakthrough

The Ecosystem: Where Intelligence Becomes Real

[ILLUSTRATION: ECOSYSTEM. Mindshare Matrix Marketplace (MMM) with creator icons and knowledge assets; Agents of Comprehension (AoC) traversing semantic pathways; nodes representing verified Tokums with SHA-256 hashes.]

When Tokums, the Tokumizer, HCNV-ColBERT, and the three layers work together, they enable an entirely new ecosystem:

Mindshare Matrix Marketplace (MMM)

Decentralized Knowledge Exchange

Knowledge creators encode their expertise into Tokums and list them for licensing. Every use triggers a micropayment to the creator.

Experts own their semantic contributions

Value flows to creators, not corporations

Knowledge becomes tradeable, verifiable digital assets (each Tokum is cryptographically secure with SHA-256)

Incentivizes sharing over hoarding
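The "every use triggers a micropayment" rule can be sketched as a small in-memory ledger. Everything here is hypothetical: the class, the integer micro-unit pricing, and the absence of any settlement or licensing logic are simplifications for illustration.

```python
from collections import defaultdict


class Marketplace:
    """Toy ledger: each use of a listed tokum credits its creator."""

    def __init__(self):
        self.listings = {}                 # tokum -> (creator, price in micro-units)
        self.balances = defaultdict(int)   # account -> balance in micro-units
        self.ledger = []                   # audit trail of every payment

    def list_tokum(self, tokum_id, creator, price_micro):
        self.listings[tokum_id] = (creator, price_micro)

    def use(self, tokum_id, consumer):
        creator, price = self.listings[tokum_id]
        self.balances[consumer] -= price
        self.balances[creator] += price
        self.ledger.append((consumer, creator, tokum_id, price))
        return price


market = Marketplace()
market.list_tokum("tokum-risk-model", creator="alice", price_micro=250)
market.use("tokum-risk-model", consumer="bank-agent")
market.use("tokum-risk-model", consumer="bank-agent")
```

Integer micro-units avoid floating-point rounding when many small payments accumulate.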

Welcome to the Age of Comprehension

This is a fundamental paradigm shift in how intelligence works.

For the first time, artificial systems will:

Preserve meaning, not collapse it into noise

Interpret contextually, adapting to Universal, Organizational, and Personal perspectives

Reason multidirectionally, across scales and environments

Explain themselves, with every connection traceable and cryptographically verified

Learn from multiple perspectives, combining global, institutional, and personal understanding

Serve people, not extract value from them

The age of black-box statistical approximation is ending.

The age of transparent, meaning-preserving, genuinely comprehending intelligence is beginning.